DevExpress AI-powered Extensions for Blazor


Use the following links for details on how to add AI-powered functionality to DevExpress Blazor components:

- AI Chat Component

- AI-powered Document Editing for HTML Editor

- AI-powered Document Editing for Rich Text Editor

- AI-powered Smart Autocomplete for Memo

- AI-powered Report Viewer and Report Designer

How it Works

DevExpress AI APIs leverage the Microsoft.Extensions.AI libraries for integration and interoperability with a wide range of AI services. These libraries establish a unified C# abstraction layer for standardized interaction with language models.

This architecture decouples your application code from specific AI SDKs. You can seamlessly switch the underlying AI model or provider with minimal code modifications. For example, you can build a prototype with a locally deployed AI model and then quickly transition to an enterprise-grade online LLM provider. These changes only involve adjustments to the app’s startup logic and the installation of necessary NuGet packages.

The IChatClient interface serves as the central mechanism for interaction with language models. Supported AI providers currently include:

- OpenAI (through Microsoft’s reference implementation)

- Azure OpenAI (through Microsoft’s reference implementation)

- Self-hosted Ollama (through the OllamaSharp library)

- Google Gemini, DeepSeek, Claude, and other major AI services through Semantic Kernel AI Connectors

- Custom IChatClient implementation for unsupported providers or private language models.

The Microsoft.Extensions.AI framework allows developers to integrate support for AI language models and services without modifying the core library. This means you can leverage third-party libraries for new AI providers or create your own custom implementation for in-house language models.
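To illustrate what a custom implementation involves, the following minimal sketch implements IChatClient against the interface shape used by recent Microsoft.Extensions.AI 9.x releases. EchoChatClient is a hypothetical class invented for this example: it returns the last user message instead of calling a model, and a real implementation would forward the messages to your in-house service at the marked line.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Runtime.CompilerServices;
using System.Threading;
using System.Threading.Tasks;
using Microsoft.Extensions.AI;

// Hypothetical client for illustration: echoes the last user message
// instead of calling a real language model.
public sealed class EchoChatClient : IChatClient
{
    public Task<ChatResponse> GetResponseAsync(
        IEnumerable<ChatMessage> messages,
        ChatOptions? options = null,
        CancellationToken cancellationToken = default)
    {
        // A real implementation would send the messages to your model here.
        string lastUserText =
            messages.LastOrDefault(m => m.Role == ChatRole.User)?.Text ?? string.Empty;
        var reply = new ChatMessage(ChatRole.Assistant, $"Echo: {lastUserText}");
        return Task.FromResult(new ChatResponse(reply));
    }

    public async IAsyncEnumerable<ChatResponseUpdate> GetStreamingResponseAsync(
        IEnumerable<ChatMessage> messages,
        ChatOptions? options = null,
        [EnumeratorCancellation] CancellationToken cancellationToken = default)
    {
        // Stream the complete answer as a single update.
        ChatResponse response = await GetResponseAsync(messages, options, cancellationToken);
        yield return new ChatResponseUpdate(ChatRole.Assistant, response.Text);
    }

    // Return this instance when a caller asks for a compatible service type.
    public object? GetService(Type serviceType, object? serviceKey = null) =>
        serviceKey is null && serviceType.IsInstanceOfType(this) ? this : null;

    public void Dispose() { }
}
```

Such a client is registered like any other provider, for example with builder.Services.AddChatClient(new EchoChatClient()).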

Note

DevExpress AI-powered extensions operate on a “bring your own key” (BYOK) model. We do not provide a proprietary REST API or bundled language models (LLMs/SLMs).

You can either deploy a self-hosted model or connect to a cloud AI provider and obtain necessary connection parameters (endpoint, API key, language model identifier, and so on). These parameters must be configured at application startup to register an AI client and enable extension functionality.
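Because components depend only on IChatClient, the choice of provider can be confined to startup code. The sketch below shows one possible arrangement that selects between a local Ollama model and OpenAI. The AI_PROVIDER and AI_MODEL variable names are assumptions made for this example, and the code assumes that the OllamaSharp and Microsoft.Extensions.AI.OpenAI packages are installed.

```csharp
using Microsoft.Extensions.AI;
using OllamaSharp;
using OpenAI;

var builder = WebApplication.CreateBuilder(args);

// AI_PROVIDER and AI_MODEL are hypothetical variable names used for this sketch.
string provider = Environment.GetEnvironmentVariable("AI_PROVIDER") ?? "ollama";
string model = Environment.GetEnvironmentVariable("AI_MODEL")
    ?? throw new InvalidOperationException("AI_MODEL environment variable is not set.");

IChatClient chatClient = provider.ToLowerInvariant() switch
{
    // Local prototype: self-hosted Ollama on its default port.
    "ollama" => new OllamaApiClient("http://localhost:11434", model),
    // Cloud provider: OpenAI with a key supplied by the environment.
    "openai" => new OpenAIClient(
            Environment.GetEnvironmentVariable("OPENAI_API_KEY")
            ?? throw new InvalidOperationException("OPENAI_API_KEY environment variable is not set."))
        .GetChatClient(model)
        .AsIChatClient(),
    _ => throw new InvalidOperationException($"Unknown AI provider '{provider}'.")
};

// The rest of the application depends only on IChatClient.
builder.Services.AddChatClient(chatClient);
```

With this layout, moving from a prototype to a hosted model changes environment settings rather than component code.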

Prerequisites

- .NET 8 SDK or above

AI language model (choose one of the following):

OpenAI Service

- Create an OpenAI account

- Create a secret key to access the OpenAI API

- Subscribe to the OpenAI API

Azure OpenAI Service

- Create an Azure account

- Create and deploy an Azure OpenAI resource

- Get the Azure OpenAI key and endpoint

Ollama (self-hosted models)

- Download and install Ollama

- Pull a model from the Ollama library

- Run the downloaded model with the ollama run <model_name> command

Foundry Local (self-hosted models)

- Explore the Foundry Local model catalog and select a model alias (ID) that meets your requirements.

The Foundry Local SDK is self-contained and handles model download and execution independently. No separate download or installation is required.

ONNX Runtime (self-hosted models)

- Get an ONNX model:

  - Download ONNX model files from Hugging Face or ONNX Model Zoo. Use the Hugging Face CLI to download models.

  - Convert existing TensorFlow or PyTorch models to ONNX with tf2onnx or the PyTorch ONNX export. For Transformer models, see Hugging Face Optimum (ONNX Runtime).

Semantic Kernel

- Search for the available AI connector or implement your custom connector

- Subscribe to the desired AI service if needed

AI Services Integration

Follow the instructions below to register an AI model and enable DevExpress AI-powered Extensions in your application.

Important

Never hardcode AI provider access keys, credentials, or API endpoints directly in your source code. Refer to the following help topic for additional information: Secret Management for Blazor AI Components.
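One way to follow this rule is to read every connection parameter from the environment and fail fast when a value is missing. The helper below is a minimal sketch. AiSettings is a hypothetical class introduced for this example and is not part of any DevExpress or Microsoft API.

```csharp
using System;

// Hypothetical helper for this sketch: reads a required setting from an
// environment variable and fails fast with a descriptive message.
public static class AiSettings
{
    public static string GetRequired(string variableName)
    {
        string? value = Environment.GetEnvironmentVariable(variableName);
        if (string.IsNullOrWhiteSpace(value))
            throw new InvalidOperationException(
                $"{variableName} environment variable is not set.");
        return value;
    }
}
```

For example, AiSettings.GetRequired("AZURE_OPENAI_ENDPOINT") could supply the endpoint at startup, which keeps both the key and the endpoint out of source control. The variable name here is an assumption. Use whatever names your deployment defines.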

OpenAI

Install the following NuGet packages to your project:

- DevExpress.Blazor

- DevExpress.AIIntegration.Blazor

- Microsoft.Extensions.AI (version 9.7.1 or later)

- Microsoft.Extensions.AI.OpenAI (version 9.7.1-preview.1.25365.4 or later)

Register the OpenAI model in the project’s entry point class:

    using Microsoft.Extensions.AI;
    using OpenAI;
    /* ... */
    // Read the API key from an environment variable
    string openAiApiKey = Environment.GetEnvironmentVariable("OPENAI_API_KEY")
        ?? throw new InvalidOperationException("OPENAI_API_KEY environment variable is not set.");
    string openAiModel = "OPENAI_MODEL";
    OpenAIClient openAIClient = new OpenAIClient(openAiApiKey);
    IChatClient openAiChatClient = openAIClient.GetChatClient(openAiModel).AsIChatClient();
    builder.Services.AddChatClient(openAiChatClient);

- Create an environment variable named OPENAI_API_KEY and set its value to your OpenAI API key. If your application throws an exception that the variable is not found, restart your IDE or terminal to ensure they load the new variable.

- Set the openAiModel variable to the OpenAI model ID.

Register DevExpress Blazor services and DevExpress AI-powered extensions in the project’s entry point class:

builder.Services.AddDevExpressBlazor(); builder.Services.AddDevExpressAI();

Azure OpenAI

Install the following NuGet packages to your project:

- DevExpress.Blazor

- DevExpress.AIIntegration.Blazor

- Microsoft.Extensions.AI (version 9.7.1 or later)

- Microsoft.Extensions.AI.OpenAI (version 9.7.1-preview.1.25365.4 or later)

- Azure.AI.OpenAI (version 2.2.0-beta.5 or later)

Register the Azure OpenAI model in the project’s entry point class:

    using Azure.AI.OpenAI;
    using Microsoft.Extensions.AI;
    using System.ClientModel;
    /* ... */
    string azureOpenAiKey = Environment.GetEnvironmentVariable("AZURE_OPENAI_KEY")
        ?? throw new InvalidOperationException("AZURE_OPENAI_KEY environment variable is not set.");
    string azureOpenAiEndpoint = "AZURE_OPENAI_ENDPOINT";
    string azureOpenAiModel = "AZURE_OPENAI_MODEL";
    AzureOpenAIClient azureOpenAIClient = new AzureOpenAIClient(
        new Uri(azureOpenAiEndpoint),
        new ApiKeyCredential(azureOpenAiKey)
    );
    IChatClient azureOpenAiChatClient = azureOpenAIClient.GetChatClient(azureOpenAiModel).AsIChatClient();
    builder.Services.AddChatClient(azureOpenAiChatClient);

- Create an environment variable named AZURE_OPENAI_KEY and set its value to your Azure OpenAI key. If your application throws an exception that the variable is not found, restart your IDE or terminal to ensure they load the new variable.

- Set the azureOpenAiEndpoint variable to your Azure OpenAI endpoint.

- Set the azureOpenAiModel variable to the Azure OpenAI model ID.

Register DevExpress Blazor services and DevExpress AI-powered extensions in the project’s entry point class:

builder.Services.AddDevExpressBlazor(); builder.Services.AddDevExpressAI();

Ollama

Install the following NuGet packages to your project:

- DevExpress.Blazor

- DevExpress.AIIntegration.Blazor

- Microsoft.Extensions.AI (version 9.7.1 or later)

- OllamaSharp

Register the self-hosted AI model in the project’s entry point class:

    using Microsoft.Extensions.AI;
    using OllamaSharp;
    /* ... */
    string aiModel = "MODEL_NAME";
    IChatClient chatClient = new OllamaApiClient("http://localhost:11434", aiModel);
    builder.Services.AddChatClient(chatClient);

Set the aiModel variable to the name of your Ollama model.

Register DevExpress Blazor services and DevExpress AI-powered extensions in the project’s entry point class:

builder.Services.AddDevExpressBlazor(); builder.Services.AddDevExpressAI();

Foundry Local

Note

Foundry Local is available as a public preview. Features, approaches, and processes can change or have limited capabilities before General Availability (GA).

Select a target platform for your app. There are two NuGet packages for the Foundry Local SDK - a Windows-specific and a cross-platform package. These packages have the same API surface but are optimized for different platforms.

Windows 10 (x64), Windows 11, and Windows Server 2025

- Change your project configuration (IDE settings or the .csproj file):

  - Set the target OS to Windows.

  - Set the target OS version to 10.0.26100.0 or later.

- Install the Microsoft.AI.Foundry.Local.WinML NuGet package (version 0.8.2.1 or later).

Cross-platform (Windows, Linux, macOS)

- Install the Microsoft.AI.Foundry.Local NuGet package (version 0.8.2.1 or later).

Install the following NuGet packages to your project:

- DevExpress.AIIntegration.Blazor

- Microsoft.Extensions.AI (version 9.7.1 or later)

- Microsoft.Extensions.AI.OpenAI (version 9.7.1-preview.1.25365.4 or later)

- OpenAI (version 2.2.0 or later)

Add a method that registers a Foundry Local client in the project’s entry point class:

    using Microsoft.AI.Foundry.Local;
    using Microsoft.Extensions.AI;
    using OpenAI;
    using System.ClientModel;
    /* ... */
    static async Task<IChatClient> RegisterFoundryLocalClient(string modelAlias, ILoggerFactory loggerFactory) {
        // Create a named logger
        var logger = loggerFactory.CreateLogger("FoundryLocal");
        // Configure Foundry Local service
        var foundryLocalConfiguration = new Configuration {
            AppName = "BlazorAIApp",
            LogLevel = Microsoft.AI.Foundry.Local.LogLevel.Information,
            Web = new Configuration.WebService() { Urls = "http://127.0.0.1:52495" }
        };
        // Initialize Foundry Local Manager
        await FoundryLocalManager.CreateAsync(foundryLocalConfiguration, logger, null);
        var manager = FoundryLocalManager.Instance;
        // Get the model catalog
        var catalog = await manager.GetCatalogAsync();
        // Get the specified model
        var model = await catalog.GetModelAsync(modelAlias)
            ?? throw new Exception($"Model {modelAlias} not found");
        // Download the model if not cached
        if(!await model.IsCachedAsync()) {
            await model.DownloadAsync();
        }
        // Load the model
        await model.LoadAsync();
        // Start the Foundry Local web service
        await manager.StartWebServiceAsync();
        // Create an OpenAI client pointing to the Foundry Local web service
        OpenAIClient client = new OpenAIClient(new ApiKeyCredential("none"), new OpenAIClientOptions {
            Endpoint = new Uri(foundryLocalConfiguration.Web.Urls + "/v1"),
        });
        return client.GetChatClient(model.Id).AsIChatClient();
    }

Add a cleanup service that unloads the model and releases resources during application shutdown. Without a cleanup service, the Foundry model can remain loaded and the local web service can continue running.

    public class FoundryLocalCleanupService : IHostedService {
        public Task StartAsync(CancellationToken cancellationToken) => Task.CompletedTask;
        public async Task StopAsync(CancellationToken cancellationToken) {
            var manager = FoundryLocalManager.Instance;
            var catalog = await manager.GetCatalogAsync();
            var model = await catalog.GetModelAsync("phi-4-mini");
            if(model != null)
                await model.UnloadAsync();
        }
    }

Register the Foundry Local AI model and a cleanup service in the project’s entry point class:

    // Create a LoggerFactory and configure console logging
    using var loggerFactory = LoggerFactory.Create(builder => {
        builder
            .SetMinimumLevel(Microsoft.Extensions.Logging.LogLevel.Information)
            .AddSimpleConsole(options => {
                options.SingleLine = true;
                options.TimestampFormat = "HH:mm:ss ";
            });
    });
    // Register a Foundry Local client with Microsoft Phi-4 model
    string modelName = "phi-4-mini";
    IChatClient chatClient = await RegisterFoundryLocalClient(modelName, loggerFactory);
    builder.Services.AddSingleton(chatClient);
    // Register the cleanup service
    builder.Services.AddHostedService<FoundryLocalCleanupService>();

Register DevExpress Blazor services and DevExpress AI-powered extensions in the project’s entry point class:

builder.Services.AddDevExpressBlazor(); builder.Services.AddDevExpressAI();

First Run

On the first run, Foundry Local SDK detects your machine’s capabilities and selects the model optimized for your hardware. If the selected model is not available locally, the SDK contacts the Microsoft AI Foundry registry and downloads the model weights (for Phi-4-mini, typically 2–4 GB). The SDK stores the downloaded model in a local cache. Subsequent runs load the model from cache without re-downloading.

Because the first run requires downloading several gigabytes, your Blazor app can take longer to start. To reduce startup delays, use the Foundry Local command-line interface (CLI) to download the selected model in advance.

ONNX Runtime

Install the following NuGet packages to your project:

- DevExpress.AIIntegration.Blazor

- Microsoft.Extensions.AI (version 9.7.1 or later)

- Microsoft.ML.OnnxRuntimeGenAI

You can use one of the following optimized libraries depending on your hardware:

- Microsoft.ML.OnnxRuntimeGenAI.DirectML: Windows GPU acceleration via DirectML (AMD, NVIDIA, Intel)

- Microsoft.ML.OnnxRuntimeGenAI.Cuda: NVIDIA GPU acceleration via CUDA

- Microsoft.ML.OnnxRuntimeGenAI.WinML: Windows ML execution provider

- Microsoft.ML.OnnxRuntimeGenAI.QNN: Qualcomm NPU acceleration (Snapdragon)

- Microsoft.ML.OnnxRuntimeGenAI.Foundry: On-device acceleration with Microsoft Foundry Local

Register the self-hosted AI model in the project’s entry point class:

    using Microsoft.Extensions.AI;
    using Microsoft.ML.OnnxRuntimeGenAI;
    /* ... */
    string modelPath = @"C:\Models\phi-4-mini";
    var config = new Config(modelPath);
    var onnxChatClient = new OnnxRuntimeGenAIChatClient(new Model(config));
    IChatClient chatClient = onnxChatClient.AsBuilder().ConfigureOptions(
        x => x.MaxOutputTokens = 4096
    ).Build();
    builder.Services.AddSingleton(chatClient);

Register DevExpress Blazor services and DevExpress AI-powered extensions in the project’s entry point class:

builder.Services.AddDevExpressBlazor(); builder.Services.AddDevExpressAI();

Semantic Kernel

The Semantic Kernel SDK provides a common interface to interact with different AI services. The Kernel communicates with AI services through AI Connectors, which expose multiple AI service types from different providers.

Semantic Kernel works with an ecosystem of ready-to-use connectors which support leading AI models from OpenAI, Google, Anthropic, DeepSeek, Mistral AI, Hugging Face, and more. You can also build custom connectors for any other service, such as your in-house language models.

The following example connects DevExpress AI-powered Extensions for Blazor to Google Gemini through the Semantic Kernel SDK:

Note

The Google chat completion connector is currently experimental. To acknowledge this and use the feature, you must explicitly suppress the compiler warnings with the #pragma warning disable directive.

- Sign in to Google AI Studio.

- Create an API key.

Install the following NuGet packages to your project:

Register the Gemini model in the project’s entry point class:

    using Microsoft.Extensions.AI;
    using Microsoft.SemanticKernel;
    using Microsoft.SemanticKernel.ChatCompletion;
    /* ... */
    string geminiApiKey = Environment.GetEnvironmentVariable("GEMINI_API_KEY")
        ?? throw new InvalidOperationException("GEMINI_API_KEY environment variable is not set.");
    string geminiAiModel = "GEMINI_MODEL";
    #pragma warning disable SKEXP0070
    var kernelBuilder = Kernel
        .CreateBuilder()
        .AddGoogleAIGeminiChatCompletion(geminiAiModel, geminiApiKey);
    Kernel kernel = kernelBuilder.Build();
    #pragma warning disable SKEXP0001
    IChatClient geminiChatClient = kernel.GetRequiredService<IChatCompletionService>().AsChatClient();
    builder.Services.AddChatClient(geminiChatClient);

- Create an environment variable named GEMINI_API_KEY and set its value to your Gemini API key. If your application throws an exception that the variable is not found, restart your IDE or terminal to ensure they load the new variable.

- Set the geminiAiModel variable to the Gemini model ID.

Register DevExpress Blazor services and DevExpress AI-powered extensions in the project’s entry point class:

builder.Services.AddDevExpressBlazor(); builder.Services.AddDevExpressAI();

Configure Inference Parameters

To control the AI model’s behavior and creativity, set inference parameters using IChatClient options. These parameters are configured once when you register the IChatClient service in the project’s entry point class. The settings then apply to all DevExpress AI-powered features, ensuring a consistent tone and style across your app.

The following code snippet configures an Azure OpenAI client that is moderately creative, avoids repeating itself, and produces reasonably detailed but not excessively long responses:

    AzureOpenAIClient azureOpenAIClient = new AzureOpenAIClient(
        new Uri(azureOpenAiEndpoint),
        new ApiKeyCredential(azureOpenAiKey)
    );
    IChatClient azureOpenAIChatClient = azureOpenAIClient.GetChatClient(azureOpenAiModel).AsIChatClient();
    IChatClient chatClient = new ChatClientBuilder(azureOpenAIChatClient)
        .ConfigureOptions(options => {
            options.Temperature = 0.7f;
            options.MaxOutputTokens = 1200;
            options.PresencePenalty = 0.5f;
        })
        .Build();
    builder.Services.AddChatClient(chatClient);

Note

A specific IChatClient implementation might have its own internal representation of options. It may use a subset of options or ignore the provided options entirely.
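The configuration callback runs for each request, so unconditional assignments replace any value a caller passes in ChatOptions. If some requests should keep their own settings, one possible variation is to assign defaults only when a property is unset. The sketch below assumes the Microsoft.Extensions.AI package. ChatClientDefaults and WithDefaults are hypothetical names used for illustration.

```csharp
using Microsoft.Extensions.AI;

public static class ChatClientDefaults
{
    // Wraps any IChatClient so that unset options receive default values.
    public static IChatClient WithDefaults(IChatClient innerClient) =>
        new ChatClientBuilder(innerClient)
            .ConfigureOptions(options =>
            {
                // ??= keeps a value that the caller set explicitly for a request.
                options.Temperature ??= 0.7f;
                options.MaxOutputTokens ??= 1200;
                options.PresencePenalty ??= 0.5f;
            })
            .Build();
}
```

The wrapped client is registered in the same way as before, for example builder.Services.AddChatClient(ChatClientDefaults.WithDefaults(azureOpenAIChatClient)).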

Verify AI Service Connectivity

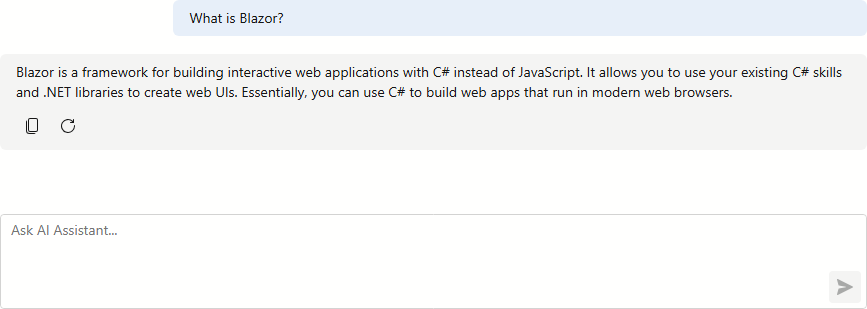

To verify connectivity with the configured AI service, add the DxAIChat component into your application.

    @using DevExpress.AIIntegration.Blazor.Chat
    @page "/"
    @rendermode InteractiveServer

    <PageTitle>DevExpress Blazor AI Chat</PageTitle>

    <DxAIChat />

Send a test prompt and confirm a response is received.
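Connectivity can also be checked without a UI. The following sketch adds a diagnostic endpoint that sends one short prompt through the registered IChatClient. The /ai-health route and the response shape are assumptions made for this example. Because the endpoint returns the exception message, it is intended for development environments only.

```csharp
using Microsoft.Extensions.AI;

var builder = WebApplication.CreateBuilder(args);
// Register IChatClient here as described in the provider sections.
// The endpoint below cannot resolve its chatClient parameter otherwise.
var app = builder.Build();

// Hypothetical diagnostic endpoint for development use.
app.MapGet("/ai-health", async (IChatClient chatClient, CancellationToken cancellationToken) =>
{
    try
    {
        // Send a minimal prompt and return the model's reply.
        ChatResponse response = await chatClient.GetResponseAsync(
            "Reply with the single word: pong",
            cancellationToken: cancellationToken);
        return Results.Ok(new { status = "ok", reply = response.Text });
    }
    catch (Exception ex)
    {
        // Surface the provider error (wrong key, unreachable endpoint, and so on).
        return Results.Problem(detail: ex.Message, title: "AI service request failed");
    }
});

app.Run();
```

A successful request confirms that the model name, key, and endpoint are valid. A failed request returns the provider error.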

Troubleshooting

This section describes common AI integration issues and steps you can follow to diagnose and resolve these issues. If the solutions listed here do not help, create a ticket in our Support Center and attach a reproducible sample project.

The AI chat responds with an “Internal Server Error” message.

- Verify that the model name, API key, endpoint, and other AI service registration parameters are correct.

- For cloud AI providers, make sure you are online and that your firewall allows access to the provider’s endpoint.

- Confirm that the self-hosted language model service (for example, Ollama) is active and responsive.
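When these checks do not reveal the cause, request logging can expose the underlying provider error. A minimal sketch, assuming the Microsoft.Extensions.AI package: AddChatClient returns a ChatClientBuilder, so the built-in logging middleware can be chained onto the registration. The Ollama client and the MODEL_NAME placeholder stand in for whichever client you registered.

```csharp
using Microsoft.Extensions.AI;
using OllamaSharp;

var builder = WebApplication.CreateBuilder(args);

// Any IChatClient works here; Ollama is used only as an example.
IChatClient chatClient = new OllamaApiClient("http://localhost:11434", "MODEL_NAME");

// UseLogging wraps the client so that each call and any exception it throws
// is written to the application's configured loggers.
builder.Services.AddChatClient(chatClient).UseLogging();

// Lower the threshold for Microsoft.Extensions.AI log categories
// so that per-request entries appear (development only).
builder.Logging.AddFilter("Microsoft.Extensions.AI", LogLevel.Debug);
```

The logged exception usually indicates whether the failure is an authentication error, an unknown model name, or a connection problem.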

“Environment variable is not set” exception in Visual Studio.

- If you store AI service registration parameters in environment variables, confirm that all necessary environment variables are set.

- Restart Visual Studio to detect the newly created environment variable.